Updated · Jan 10, 2024

Updated · Oct 25, 2023

Muninder Adavelli is a core team member and Digital Growth Strategist at Techjury. With a strong bac...

Lorie is an English Language and Literature graduate passionate about writing, research, and learnin...

Web scraping is the automated process of extracting data from web pages. One of the challenges of scraping is the time it takes to handle massive amounts of data, especially with over 1.145 trillion MB of new data appearing on the web daily.

When dealing with huge volumes of data, Excel becomes crucial. The spreadsheet app is an excellent data analysis tool with valuable functions and features that help in wrangling extracted data.

In this article, you will learn how to scrape data from websites to Excel. Keep reading!


Web scraping is a helpful tool for business and research, and people scrape websites for many different reasons.

There are four standard methods to extract data into Excel, each with its own advantages and disadvantages: manual copying and pasting, automated web scraping tools, Excel VBA, and Excel web queries.

Continue reading to learn how each method works.

You can scrape websites to Excel by manually copying and pasting the data. This method is simple, but it can be slow when handling large amounts of data.

Here’s an easy guide on how to scrape sites to Excel manually:

Step 1: Open the website you want in your browser.

Step 2: Go to the information you want to extract.

Step 3: Highlight the data with your mouse. Right-click and select “Copy” or use “Ctrl + C” on your keyboard.

Step 4: Open Excel.

Step 5: Paste the information you copied.

Step 6: Fix the formatting based on your preference.

✅ Pro Tip: When pasting data into Excel, use the “Format Cells” feature. It lets you choose how the data is displayed (for example, as text, a number, or a date) and helps you avoid mistakes.

Automated data extraction to Excel involves using web scrapers. You can add scrapers to your browsers or use them as separate programs.

These tools collect the data for you and put it into an Excel file. They make the process quicker, and they are better at handling larger amounts of data.

⚠️ Warning: Scraping too much data in one session can slow websites down or crash them. It is best to divide the work into smaller sessions, even if you could scrape everything in seconds.
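As a rough illustration of splitting a job into smaller sessions, the sketch below groups a list of pages into batches and pauses between them. The function names, the batch size, and the pause length are assumptions made for this example, and `fetch` stands in for whatever download routine your scraper uses.

```python
import time


def in_batches(items, batch_size):
    """Yield consecutive slices of items, each at most batch_size long."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def scrape_in_sessions(urls, fetch, batch_size=5, pause_seconds=2.0):
    """Call fetch(url) for every URL, pausing between batches."""
    results = []
    for index, batch in enumerate(in_batches(urls, batch_size)):
        if index > 0:
            time.sleep(pause_seconds)  # give the site a rest between sessions
        results.extend(fetch(url) for url in batch)
    return results


# Hypothetical page names and a stand-in fetch function (no network access).
pages = ["page-1", "page-2", "page-3"]
fetched = scrape_in_sessions(pages, str.upper, batch_size=2, pause_seconds=0)
print(fetched)
```

A longer pause and a smaller batch size are gentler on the target site, at the cost of a slower run.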

Here’s a simple guide on automatically extracting web data to Excel with scraper tools:

Step 1: Choose and install a web scraping tool.

Step 2: Open the tool and start a new project.

Step 3: Go to the website you want to scrape.

Step 4: Choose what you want to scrape, like product prices, reviews, or details.

Step 5: Run the tool to extract and save the data in an Excel file.
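To give a feel for what such a tool does behind the scenes, here is a minimal Python sketch that pulls the rows out of an HTML table and turns them into CSV text that Excel can open. It uses only the standard library, the sample HTML is made up for illustration, and real scraper tools handle far messier pages than this.

```python
import csv
import io
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows
        self._row = None    # row being built, or None outside <tr>
        self._cell = None   # text pieces of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None  # drop any cell left open by malformed HTML


def table_to_csv(html_text):
    """Return the table rows found in html_text as CSV text."""
    parser = TableParser()
    parser.feed(html_text)
    buffer = io.StringIO()
    csv.writer(buffer).writerows(parser.rows)
    return buffer.getvalue()


# Hypothetical sample page; a real scraper would download this HTML first.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Keyboard</td><td>49.99</td></tr>
  <tr><td>Mouse</td><td>19.50</td></tr>
</table>
"""

csv_text = table_to_csv(SAMPLE_HTML)
print(csv_text)
```

To save the result, one option is to write `csv_text` to a `.csv` file opened with `newline=""` and `encoding="utf-8-sig"`, which helps Excel recognize the file as UTF-8.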

✅ Pro Tip: Using a proxy server while scraping is highly recommended to mask your IP address. A proxy helps prevent IP blocks from your target's anti-scraping security.
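If you script your own requests instead of using a ready-made tool, routing them through a proxy could look roughly like the sketch below. It relies on Python's standard `urllib.request` module. The proxy address is a placeholder assumption, not a working server, and the helper name `build_proxy_opener` is invented for this example.

```python
import urllib.request

# Hypothetical proxy address; replace it with one from your proxy provider.
PROXY_URL = "http://127.0.0.1:8080"


def build_proxy_opener(proxy_url):
    """Return an opener that sends HTTP and HTTPS requests via proxy_url."""
    handler = urllib.request.ProxyHandler(
        {"http": proxy_url, "https": proxy_url}
    )
    return urllib.request.build_opener(handler)


opener = build_proxy_opener(PROXY_URL)
# Calling opener.open("https://website-url.com") would now go through the proxy.
```

Nothing is requested until `opener.open(...)` is called, so the snippet is safe to run as a configuration check.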

Excel Visual Basic for Applications (VBA) is another method to consider when scraping sites to Excel. It involves writing code that automates the web scraping process. Programmers often use this method to customize scraping and improve data management.

Using VBA for web scraping is more complex, but it offers more room for customization. This method is also better suited to scraping large amounts of data.

Here’s a simple step-by-step guide for scraping data to Excel using Excel VBA:

Step 1: Go to the website you want to scrape. Copy its URL. Take note of what you want to scrape.

Step 2: Open the Visual Basic Editor in Excel. Press Alt + F11, and right-click on the Project Explorer.

Step 3: Select Insert, then Module. This window is where you will write and run your VBA code.

Step 4: Declare the variables that you need (e.g., the website URL) and the elements that you want to scrape.

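```vba
' HTMLDocument needs a reference to "Microsoft HTML Object Library" (Tools > References)
Dim url As String            ' address of the page to scrape
Dim html As New HTMLDocument ' holds the downloaded page
Dim topics As Object         ' elements you want to extract
```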

Step 5: Use VBA code to send HTTP requests to the website and get the page's HTML source code.

```vba
url = "https://website-url.com"
' Send a synchronous GET request and load the response into the HTML document
With CreateObject("MSXML2.XMLHTTP")
    .Open "GET", url, False
    .send
    html.body.innerHTML = .responseText
End With
```

Step 6: Extract the data from the website by selecting the elements you need from the downloaded HTML and printing them with Debug.Print.

Step 7: To see the scraped data, check the Immediate Window.
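For readers who want to see the same fetch, extract, and print flow outside of VBA, the sketch below mirrors Steps 6 and 7 in Python using only the standard library. The page source, the choice of `<h2>` as the target tag, and the names `TagTextCollector` and `extract_topics` are all assumptions made for illustration.

```python
from html.parser import HTMLParser


class TagTextCollector(HTMLParser):
    """Collect the text inside every occurrence of one tag, e.g. <h2>."""

    def __init__(self, tag):
        super().__init__()
        self.tag = tag
        self.texts = []
        self._parts = None  # text pieces while inside the target tag

    def handle_starttag(self, tag, attrs):
        if tag == self.tag:
            self._parts = []

    def handle_data(self, data):
        if self._parts is not None:
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag == self.tag and self._parts is not None:
            self.texts.append("".join(self._parts).strip())
            self._parts = None


def extract_topics(html_text, tag="h2"):
    """Return the text of every <tag> element found in html_text."""
    collector = TagTextCollector(tag)
    collector.feed(html_text)
    return collector.texts


# Hypothetical page source standing in for the response text from Step 5.
PAGE = "<h1>Blog</h1><h2>Excel tips</h2><p>intro</p><h2>VBA <em>basics</em></h2>"

topics = extract_topics(PAGE)
for topic in topics:
    print(topic)  # plays the role of Debug.Print in the Immediate Window
```

Text inside nested tags such as `<em>` is kept, because the collector gathers all text until the target tag closes.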

✅ Pro Tip: Keep the Immediate Window open when scraping with Excel VBA. It shows the output of your code as it runs, which helps you find and fix errors.

Web queries are a robust tool in Excel that helps you extract data from the web and put it into your spreadsheet. Using a web query allows you to collect and update data automatically.

Below are the steps to pull data from websites to Excel using web queries:

Step 1: Open Microsoft Excel. Click on the Data tab at the top of the screen.

Step 2: Choose the From Web option in the Get & Transform Data section.

Step 3: Paste the URL of the website that you want to scrape and click Go.

Step 4: Excel will open the webpage and show you a preview of the data you can scrape. Use your mouse to select the data you want to bring to Excel.

Step 5: Click Load to put the data into Excel. If you want to edit the data's appearance, click Edit to make changes.

Step 6: Give the data a name. If needed, select Properties to choose when to get new data and other parameters.

You can set up a web query to get new data automatically at a set interval, like daily or weekly. This saves time compared to setting up a query manually every time.

✅ Pro Tip: Instead of scraping huge volumes of data at once, go for smaller, specific pieces for a better and faster process.

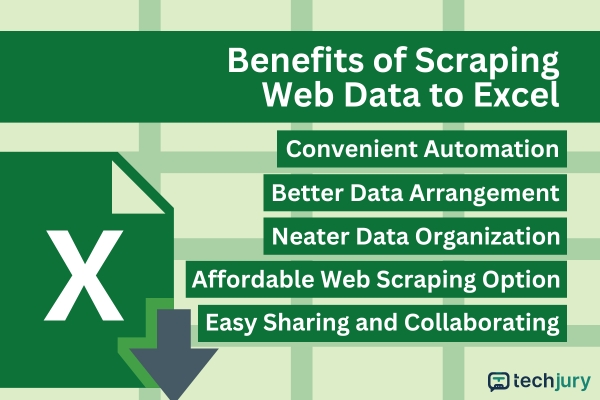

Web scraping has been a handy process since 1989, and it became more efficient with Excel. Listed below are some of the benefits of scraping web data to Excel.

Convenient Automation

Depending on the setup, web scraping can make data collection automatic. With Excel, repetitive tasks like copying and pasting are also automated, freeing up time for other essential tasks.

⚠️ Warning: Always look into a website's scraping rules and regulations before starting a scraping project. Violating a site’s terms may come with legal consequences.

Better Data Arrangement

With Excel, you can make your data look simple and easy to understand. You can also change how the data looks, making it fit your needs perfectly.

Neater Data Organization

Excel helps you organize your data neatly in workbooks or sheets. You can also use Excel’s built-in tools to sort and find any piece of information quickly.

Easy to Share and Collaborate

Around 750 million people use Excel, so data sharing in the platform is convenient. Users can access the data or work together to study, edit, or fix the data.

Affordable Option

Using Excel to scrape data on the Internet saves money since you won’t have to purchase specialized software.

👍 Helpful Article: If budget is an issue, you can try the Scraping Browser for your tasks. This tool automates data extraction like any paid scraper but at a lower price than APIs.

There are different ways to extract data from the Internet and integrate it into Excel. Whether it is via manual copying, efficient scraper tools, Excel VBA, or web queries, Excel proves to be a flexible ally in data extraction.

Scraping sites to Excel comes with a lot of benefits. It is cost-effective, simplifies data formatting, supports organization, and facilitates collaboration.

However, it is crucial to always adhere to ethical scraping practices and respect website rules to avoid legal repercussions and site disruptions.

The best way to scrape data to Excel is by using special tools like Bright Data or NimbleWay, which allow you to choose the data you want and transfer it to Excel.

Python is the easiest and most popular language for web scraping. It has libraries like BeautifulSoup and Scrapy that make web scraping even easier.

Web scraping is a skill that companies need to gain data for research and decision-making. It requires technical knowledge of programming languages, tools, and libraries.
